At present, our research is mainly concerned with the question of how information is integrated from multiple sensory modalities into a coherent perception of the world. This is an exciting time to study perception, because we are amidst a paradigm shift: for more than a century, perception has been viewed as a modular function with different sensory modalities operating largely as separate and independent modules. (As a result, multi-sensory integration has been one of the least studied areas of research in perception.) However, over the last few years, accumulating evidence for cross-modal interactions in perception has led to a new surge of interest in this field, making it arguably one of the fastest growing areas of research in perception, and has swiftly overturned the long-standing modular view of perceptual processing. Our studies have been among those that have started a shift towards an integrated and interactive paradigm of sensory processing.

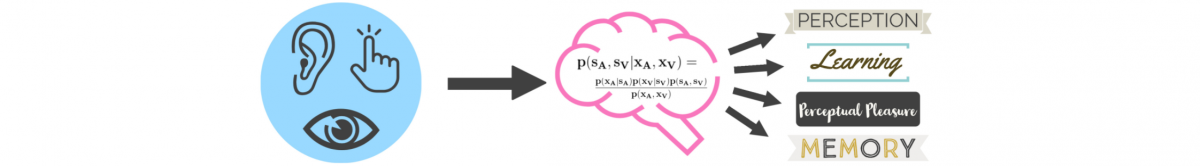

The general goal of our research is to understand the mechanisms and principles of human perception. Human perception in natural environments almost always involves processing sensory information from multiple sensory modalities; therefore, understanding perception requires understanding multi-sensory integration. Much of our current research investigates learning. Like perception, learning in natural settings almost always occurs in a multi-sensory environment, so understanding learning requires understanding multi-sensory learning.

Current Projects

Plasticity of Multisensory Integration

Research over the past 20 years has established that cross-modal interactions are ubiquitous across all kinds of sensory processing. One of the most critical brain computations at the core of perception is estimating whether stimuli in different modalities originate from a common source or from independent sources, and therefore whether they should be integrated. This computation is known as causal inference and is influenced by a number of factors. One of the main factors determining whether signals from different modalities are integrated is the prior probability of a common cause, which we refer to as the ‘binding tendency’. Little is known about how malleable this prior expectation is, or whether and how it can be changed. We are currently investigating how stable, plastic, and domain-specific the binding tendency is.

Team: Kimia Kamal, Saul Ivan Quintero
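
To make the role of the binding tendency concrete, here is a minimal Python sketch of the widely used Gaussian formulation of Bayesian causal inference for an auditory-visual pair. It assumes each measurement (`x_a`, `x_v`) equals the source location plus Gaussian noise (`sigma_a`, `sigma_v`), that source locations are drawn from a Gaussian prior (`mu_p`, `sigma_p`), and that `p_common` stands for the binding tendency. The function names and parameter values are illustrative assumptions, not code from our toolbox.

```python
import math


def likelihood_common(x_a, x_v, sigma_a, sigma_v, sigma_p, mu_p):
    """p(x_a, x_v | C=1): both measurements arise from one source s ~ N(mu_p, sigma_p^2)."""
    va, vv, vp = sigma_a ** 2, sigma_v ** 2, sigma_p ** 2
    denom = va * vv + va * vp + vv * vp
    quad = ((x_v - x_a) ** 2 * vp + (x_v - mu_p) ** 2 * va + (x_a - mu_p) ** 2 * vv) / denom
    return math.exp(-0.5 * quad) / (2 * math.pi * math.sqrt(denom))


def likelihood_independent(x_a, x_v, sigma_a, sigma_v, sigma_p, mu_p):
    """p(x_a, x_v | C=2): each measurement has its own source drawn from the same prior."""
    va, vv, vp = sigma_a ** 2, sigma_v ** 2, sigma_p ** 2
    quad = (x_v - mu_p) ** 2 / (vv + vp) + (x_a - mu_p) ** 2 / (va + vp)
    return math.exp(-0.5 * quad) / (2 * math.pi * math.sqrt((vv + vp) * (va + vp)))


def posterior_common(x_a, x_v, p_common, sigma_a, sigma_v, sigma_p, mu_p=0.0):
    """p(C=1 | x_a, x_v) by Bayes' rule; p_common is the prior ('binding tendency')."""
    l1 = likelihood_common(x_a, x_v, sigma_a, sigma_v, sigma_p, mu_p)
    l2 = likelihood_independent(x_a, x_v, sigma_a, sigma_v, sigma_p, mu_p)
    return l1 * p_common / (l1 * p_common + l2 * (1 - p_common))


if __name__ == "__main__":
    # Same pair of measurements evaluated under different binding tendencies (illustrative values).
    for p in (0.2, 0.5, 0.8):
        print(p, round(posterior_common(2.0, 6.0, p, sigma_a=8.0, sigma_v=2.0, sigma_p=15.0), 3))
```

Holding the measurements fixed, a larger `p_common` yields a larger posterior probability of a common cause, and a larger discrepancy between the measurements yields a smaller one. In this formulation, a change in binding tendency would therefore change how often the same pair of signals is integrated.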

Habit Learning and Extinction

Habit formation and extinction have been topics of extensive research, particularly in animal models. While there has been progress in understanding the neural circuitry involved in habit formation, the factors that influence the formation and extinction of habits in humans are still poorly understood. We are currently investigating this question from a sensory perspective using multiple experimental methods. This research has significant translational and clinical applications.

Team: Saul Ivan Quintero, Puneet Bhargava

Multi-sensory memory

We examine how encoding information from multiple sensory modalities affects subsequent recall or recognition of uni-sensory information.

Team: Arit Glicksohn, Carolyn Murray

Computational Modeling

We develop and test Bayesian models of multi-sensory perception and learning. We use these models to account for empirical findings and to address basic questions about the nature and characteristics of perceptual processing and learning in health and disease.

We recently released to the public a beta version of a MATLAB toolbox for our Bayesian Causal Inference model of multi-sensory perception. It can be used to understand the model and to adapt it to account for experimental data from a variety of tasks. Development of this toolbox was sponsored by the NSF.
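
As an illustration of how a causal inference model produces perceptual estimates, the sketch below (in Python, and not the MATLAB toolbox) uses the Gaussian formulation with model averaging: each modality's final estimate combines the fused estimate (one cause, C=1) and the segregated estimate (two causes, C=2), weighted by their posterior probabilities. Model averaging is only one of several possible decision strategies; choosing it here, like all names and parameter values, is an assumption for illustration.

```python
import math


def _lik_common(x_a, x_v, va, vv, vp, mu_p):
    """p(x_a, x_v | C=1) for one source s ~ N(mu_p, vp)."""
    denom = va * vv + va * vp + vv * vp
    quad = ((x_v - x_a) ** 2 * vp + (x_v - mu_p) ** 2 * va + (x_a - mu_p) ** 2 * vv) / denom
    return math.exp(-0.5 * quad) / (2 * math.pi * math.sqrt(denom))


def _lik_independent(x_a, x_v, va, vv, vp, mu_p):
    """p(x_a, x_v | C=2) for two independent sources drawn from N(mu_p, vp)."""
    quad = (x_v - mu_p) ** 2 / (vv + vp) + (x_a - mu_p) ** 2 / (va + vp)
    return math.exp(-0.5 * quad) / (2 * math.pi * math.sqrt((vv + vp) * (va + vp)))


def bci_estimates(x_a, x_v, p_common, sigma_a, sigma_v, sigma_p, mu_p=0.0):
    """Return (auditory estimate, visual estimate, p(C=1 | x)) under model averaging."""
    va, vv, vp = sigma_a ** 2, sigma_v ** 2, sigma_p ** 2
    l1 = _lik_common(x_a, x_v, va, vv, vp, mu_p)
    l2 = _lik_independent(x_a, x_v, va, vv, vp, mu_p)
    post_c1 = l1 * p_common / (l1 * p_common + l2 * (1 - p_common))

    # C=1: a single fused estimate, weighting both cues and the prior mean by reliability (1/variance).
    fused = (x_a / va + x_v / vv + mu_p / vp) / (1 / va + 1 / vv + 1 / vp)
    # C=2: each cue is combined only with the prior.
    seg_a = (x_a / va + mu_p / vp) / (1 / va + 1 / vp)
    seg_v = (x_v / vv + mu_p / vp) / (1 / vv + 1 / vp)

    # Model averaging: weight each causal structure's estimate by its posterior probability.
    s_hat_a = post_c1 * fused + (1 - post_c1) * seg_a
    s_hat_v = post_c1 * fused + (1 - post_c1) * seg_v
    return s_hat_a, s_hat_v, post_c1
```

With a more reliable visual cue (`sigma_v` smaller than `sigma_a`), the auditory estimate is pulled toward the visual measurement in proportion to the posterior probability of a common cause, a ventriloquism-like bias. With `p_common` at 0 the two estimates stay segregated; at 1 they coincide.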

Levels of Study

Our research tackles the question of multi-sensory perception and learning at various levels:

Phenomenology: This is to find out how the different modalities interact at a descriptive level. We investigate the phenomenology of these interactions using behavioral experiments.

Brain Mechanisms: This is to find out which brain areas and pathways are involved, what kind of circuitry (bottom-up, top-down, etc.) they form, and how each area or mechanism contributes to multi-sensory perception and learning. We have been using event-related potentials and functional neuroimaging to investigate these questions. We also collaborate with neurophysiologists who make single-unit recordings in awake, behaving monkeys.

Computational Principles: This is to find out what the general theoretical rules governing multi-sensory perception and learning are. To gain insight into these general principles, one needs to find a model that can account for the behavioral data. We have been using statistical modeling to gain insight into these rules and principles.

Bayesian Causal Inference Toolbox (BCIT): Developed in our lab by Dr. Majed Samad with assistant developer Kellienne Sita, BCIT is now in beta release and available at https://github.com/multisensoryperceptionlab/BCIT. It is designed for researchers of any background who wish to learn about and/or use the Bayesian causal inference model, and it does not require any computational training or skills.
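
Accounting for behavioral data means fitting model parameters to observers' responses. The Python sketch below shows one simple way to do this for the binding tendency alone. It simulates noisy measurements to predict how often an observer would report a common source for each stimulus pair, then picks the `p_common` on a grid that maximizes the binomial log-likelihood of the observed report counts. The decision rule (report "common" when the posterior exceeds 0.5), the fixed noise parameters, the grid search, and all names are hypothetical choices for illustration; this is not the fitting procedure used in BCIT or in our studies.

```python
import math
import random


def log_lik_common(x_a, x_v, va, vv, vp, mu_p):
    """log p(x_a, x_v | C=1): one source s ~ N(mu_p, vp) generates both measurements."""
    denom = va * vv + va * vp + vv * vp
    quad = ((x_v - x_a) ** 2 * vp + (x_v - mu_p) ** 2 * va + (x_a - mu_p) ** 2 * vv) / denom
    return -0.5 * quad - math.log(2 * math.pi * math.sqrt(denom))


def log_lik_independent(x_a, x_v, va, vv, vp, mu_p):
    """log p(x_a, x_v | C=2): two independent sources, each drawn from N(mu_p, vp)."""
    quad = (x_v - mu_p) ** 2 / (vv + vp) + (x_a - mu_p) ** 2 / (va + vp)
    return -0.5 * quad - math.log(2 * math.pi * math.sqrt((vv + vp) * (va + vp)))


def predicted_common_reports(s_a, s_v, p_common, sigma_a, sigma_v, sigma_p,
                             mu_p=0.0, n_samples=1000, seed=0):
    """Monte Carlo estimate of P(report 'common source' | true stimuli s_a, s_v).

    Hypothetical decision rule: report 'common' when p(C=1 | x) > 0.5, i.e. when the
    posterior log-odds are positive. Requires 0 < p_common < 1. The fixed seed reuses
    the same noise draws on every call (common random numbers).
    """
    rng = random.Random(seed)
    va, vv, vp = sigma_a ** 2, sigma_v ** 2, sigma_p ** 2
    prior_log_odds = math.log(p_common) - math.log(1 - p_common)
    n_common = 0
    for _ in range(n_samples):
        x_a = rng.gauss(s_a, sigma_a)
        x_v = rng.gauss(s_v, sigma_v)
        log_odds = (prior_log_odds
                    + log_lik_common(x_a, x_v, va, vv, vp, mu_p)
                    - log_lik_independent(x_a, x_v, va, vv, vp, mu_p))
        if log_odds > 0:
            n_common += 1
    return n_common / n_samples


def fit_p_common(conditions, observed, sigma_a, sigma_v, sigma_p, grid=None):
    """Grid-search maximum-likelihood estimate of p_common.

    conditions: list of (s_a, s_v) stimulus pairs.
    observed: list of (n_common_reports, n_trials), one entry per condition.
    """
    grid = grid or [i / 20 for i in range(1, 20)]  # 0.05, 0.10, ..., 0.95
    best_p, best_ll = None, -math.inf
    for p in grid:
        ll = 0.0
        for (s_a, s_v), (k, n) in zip(conditions, observed):
            q = predicted_common_reports(s_a, s_v, p, sigma_a, sigma_v, sigma_p)
            q = min(max(q, 1e-6), 1 - 1e-6)  # keep log() finite
            # Binomial log-likelihood, up to a constant that does not depend on p.
            ll += k * math.log(q) + (n - k) * math.log(1 - q)
        if ll > best_ll:
            best_p, best_ll = p, ll
    return best_p
```

A quick sanity check is parameter recovery: generate report proportions from the model at a known `p_common`, then confirm that the fit returns the same or a neighboring grid value. In practice the noise parameters would be fitted jointly with `p_common`, typically with a numerical optimizer rather than a grid.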

Methods of Study

Traditional Psychophysics

Virtual Reality: This is a portable and immersive system that allows the subject to move around, inside or outside the lab, and perform daily tasks while the images projected to the subject’s head-mounted display are altered in real time, in effect altering the subject’s “reality.” This system can be used to investigate how people adapt to changes in the environment. We currently use the Meta Quest 2 for these investigations.

Computational Modeling

Applications of our research

Our research on learning has important implications for education and rehabilitation. We are currently trying to apply our findings and methods to inform the development of clinical interventions for various populations.